Introduction:

A benefit of relying on managed cloud services to execute our code is that they take much of the knowledge and many of the headaches of the overall system off our hands. We don’t need to know what a Firecracker VM is, and we don’t need to know how the Lambda service scales its VMs to handle load. However, this layer of abstraction can become a problem when our applications behave in unpredictable ways. Retry logic when things go wrong is a good example.

The reason this is a complex problem to solve is two-fold:

- Synchronous and asynchronous Lambda function executions behave very differently.

- Our architectures commonly rely on decoupling mechanisms such as EventBridge, SNS or SQS and these managed services also have their own retry mechanisms.

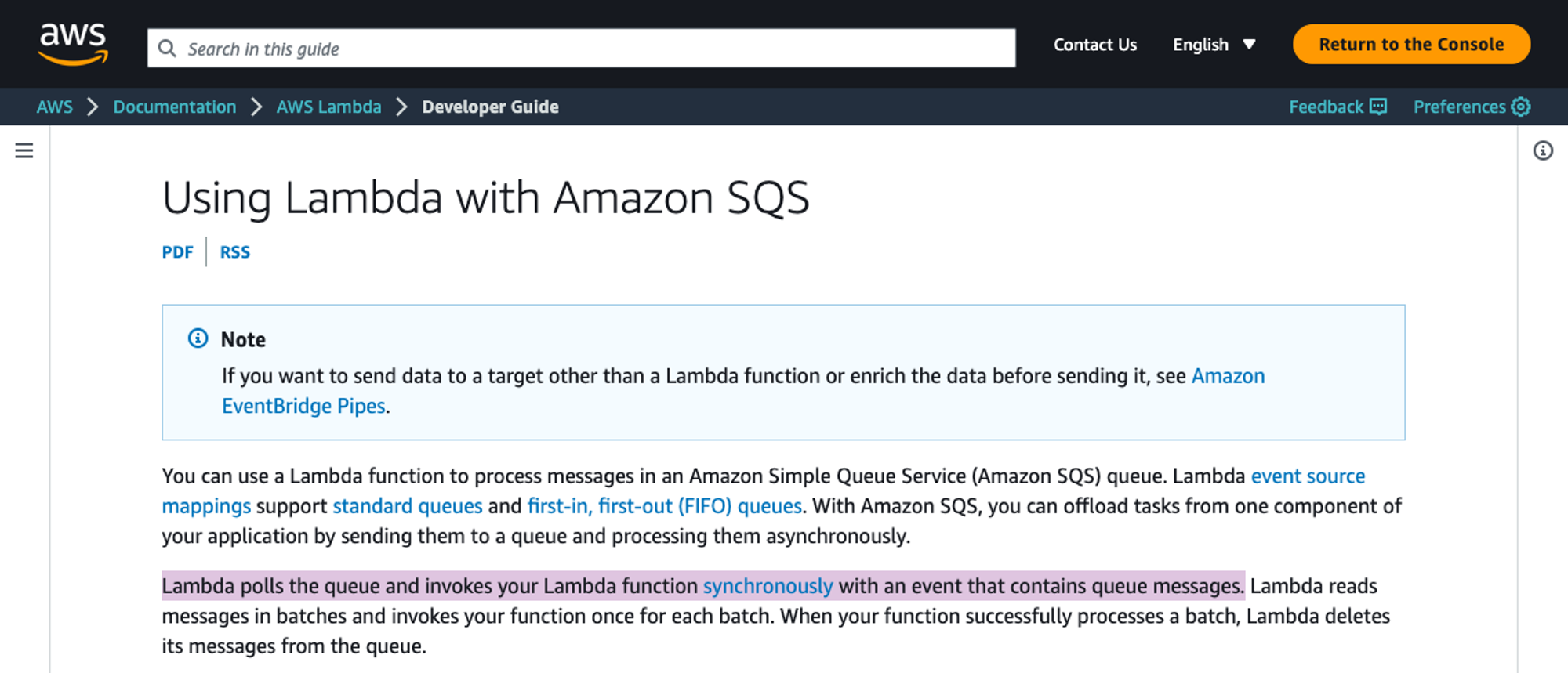

Here is some evidence that the industry itself is still confused. The AWS documentation for invoking Lambda functions from SQS queues states that “Lambda polls the queue and invokes your Lambda function synchronously with an event that contains queue messages.”

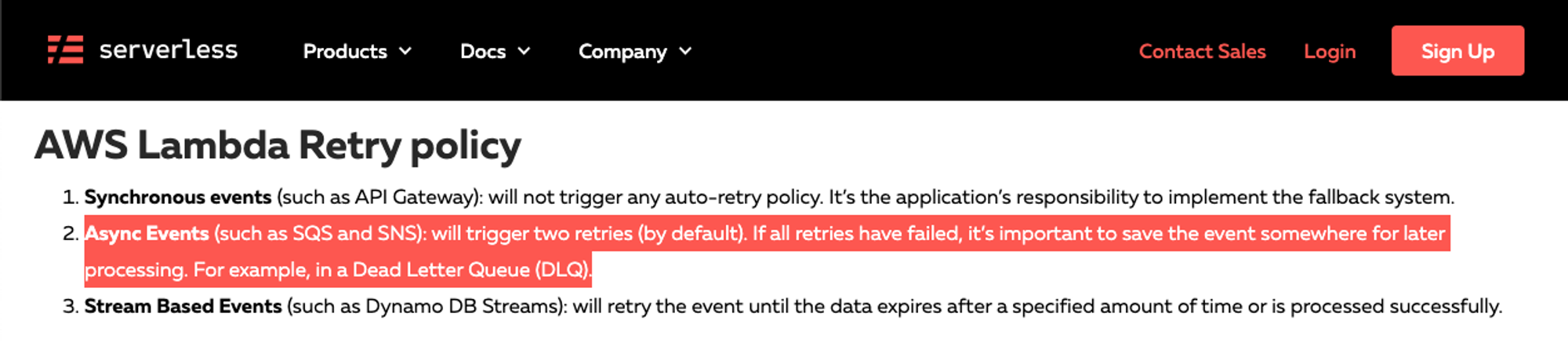

However, the Serverless Framework has a guide stating that SQS queues trigger Lambda functions asynchronously.

So the question to answer today is:

When there is a failure, how many times is my code rerun?

— Every Cloud Developer ever

Sync vs Async

For those unfamiliar with the two invocation types, it is important to distinguish between the synchronous and asynchronous invocation of a Lambda function. The AWS documentation summarises them as:

“In synchronous invocations, the caller waits for the function to complete execution and the function can return a value. In asynchronous operation, the caller places the event on an internal queue, which is then processed by the Lambda function.”

Why is this relevant to a discussion on retry logic?

Because the two invocation types handle errors differently. Asynchronous invocations can be configured to retry 0-2 times, and synchronous invocations do not retry. To add more fuel to the fire, each AWS service that integrates with Lambda invokes functions in its own way (sync or async) and can also have its own retry logic on top of this.
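Since synchronous invocations are not retried by Lambda, any retry has to live in the caller. The following is a minimal sketch of what that could look like, not a definitive implementation. The `call_with_retries` helper, its parameters and the `invoke` callable are all hypothetical names introduced for illustration; `invoke` is assumed to raise an exception when the call fails.

```python
import random
import time
from typing import Callable, TypeVar

T = TypeVar("T")


def call_with_retries(
    invoke: Callable[[], T],
    max_attempts: int = 3,
    base_delay_s: float = 0.2,
    sleep: Callable[[float], None] = time.sleep,
) -> T:
    """Call invoke() until it succeeds or max_attempts calls have been made.

    `invoke` is a hypothetical callable that wraps one synchronous
    invocation and raises on failure.
    """
    if max_attempts < 1:
        raise ValueError("max_attempts must be at least 1")
    for attempt in range(1, max_attempts + 1):
        try:
            return invoke()
        except Exception:
            if attempt == max_attempts:
                raise  # out of attempts: surface the last error to the caller
            # Exponential backoff with jitter: wait between 0 and
            # base_delay_s * 2^(attempt - 1) seconds before the next try.
            sleep(random.uniform(0, base_delay_s * 2 ** (attempt - 1)))
    raise AssertionError("unreachable")
```

The point of making `max_attempts` explicit is that the answer to "how many times is my code rerun?" is then decided by the caller rather than by a default buried in a managed service.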

Comparison Table

This comparison table is an attempt at curating all the different retry mechanisms in one place, so that you can compare service integrations and understand how to configure retries in each scenario.

| Trigger | Invocation Type | Retry Count | How To Configure |
|---|---|---|---|
| Lambda Invocation (Sync) | Synchronous | None (retry from client) | Build retry logic in client software |
| Lambda Invocation (Async) | Asynchronous | 0-2 (default of 2) | AWS::Lambda::EventInvokeConfig - MaximumRetryAttempts attribute |
| SNS Topic (Async) | Asynchronous | 0-2 (default of 2) | AWS::SNS::Subscription - DeliveryPolicy attribute |
| SNS Topic + SQS | Synchronous | Constant retries until either message retention period is reached or “max receive count” is exceeded | AWS::SQS::Queue - MessageRetentionPeriod and RedrivePolicy attributes |
| DynamoDB Stream | Synchronous | By default, constant retries until record expires (24 hours) | AWS::Lambda::EventSourceMapping - MaximumRetryAttempts and MaximumRecordAgeInSeconds attributes |
| EventBridge | Asynchronous | Configurable up to 185 times over 24 hours (exponential backoff) | AWS::Events::Rule - Targets attribute (nested within each target as RetryPolicy) |
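To make the "How To Configure" column more concrete, here is a hedged sketch of three of those settings as CloudFormation resources, written as a Python dictionary and printed as JSON. The resource types and property names come from the table; the logical IDs, ARNs, event pattern and the specific retry values are assumptions chosen for illustration, and the fragment leaves out everything else a real stack would need (the function itself, permissions, the dead-letter queue).

```python
import json

# Hypothetical ARNs, used only for illustration.
FUNCTION_ARN = "arn:aws:lambda:eu-west-1:123456789012:function:my-function"
DLQ_ARN = "arn:aws:sqs:eu-west-1:123456789012:my-dead-letter-queue"

resources = {
    # Asynchronous invocations: allow one retry instead of the default two.
    "AsyncRetryConfig": {
        "Type": "AWS::Lambda::EventInvokeConfig",
        "Properties": {
            "FunctionName": "my-function",
            "Qualifier": "$LATEST",
            "MaximumRetryAttempts": 1,
        },
    },
    # SQS: a message received 3 times without being deleted is moved
    # to the dead-letter queue rather than retried until it expires.
    "WorkQueue": {
        "Type": "AWS::SQS::Queue",
        "Properties": {
            "MessageRetentionPeriod": 345600,  # 4 days, in seconds
            "RedrivePolicy": {
                "deadLetterTargetArn": DLQ_ARN,
                "maxReceiveCount": 3,
            },
        },
    },
    # EventBridge: cap retries per target well below the 185 default.
    "OrderRule": {
        "Type": "AWS::Events::Rule",
        "Properties": {
            "EventPattern": {"source": ["my.app"]},
            "Targets": [
                {
                    "Id": "my-function-target",
                    "Arn": FUNCTION_ARN,
                    "RetryPolicy": {
                        "MaximumRetryAttempts": 5,
                        "MaximumEventAgeInSeconds": 3600,
                    },
                }
            ],
        },
    },
}

print(json.dumps({"Resources": resources}, indent=2))
```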

Implications

Why do we even care about our code retrying? Surely any retried attempts are simply a bonus attempt at progressing our workflows, right?

These are the three potential issues:

- Lambda throttling - If a Lambda function fails when invoked from EventBridge, by default the invocation will be retried up to 185 times over the next 24 hours. That is not a big deal on its own, given the default concurrency limit for Lambda in a region is 1000. However, if an underlying issue is causing every function in the workflow to fail, and tens or hundreds of events are entering EventBridge each second, those 185 retries per event risk exhausting your regional Lambda concurrency and bringing down other applications.

- Pricing - More Lambda invocations = bigger bill. If Lambda is retrying with no hope of success, you are simply giving money away.

- Idempotency - If your Lambda function throws an error halfway through executing its code, you may leave data stores or state management in “limbo”. Retrying a Lambda function in this state is either doomed to fail, or worse, causes more havoc. Imagine you had a single Lambda function that executes a customer payment transaction and then sends a confirmation email. If the confirmation email mechanism was causing failures, Lambda would retry repeatedly and potentially force multiple payments for that customer.
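The payment-then-email scenario can be sketched in a few lines. This is an illustration under stated assumptions rather than a production pattern: `InMemoryStore`, `process_order`, the `order_id` field and the `charge` and `send_email` callables are all hypothetical names. A real function would need a durable store with an atomic "write only if absent" operation, since in-memory state does not survive between execution environments and the check-then-mark shown here is not atomic.

```python
from typing import Any, Callable, Dict, Set

Event = Dict[str, Any]


class InMemoryStore:
    """Stand-in for a durable store of completed steps (hypothetical)."""

    def __init__(self) -> None:
        self._done: Set[str] = set()

    def already_done(self, key: str) -> bool:
        return key in self._done

    def mark_done(self, key: str) -> None:
        self._done.add(key)


def process_order(
    event: Event,
    store: InMemoryStore,
    charge: Callable[[Event], None],
    send_email: Callable[[Event], None],
) -> None:
    """Run each step at most once per order, even if the handler is retried."""
    order_id = event["order_id"]  # assumed to uniquely identify the order
    charge_key = f"charge:{order_id}"
    email_key = f"email:{order_id}"

    if not store.already_done(charge_key):
        charge(event)
        store.mark_done(charge_key)

    # If this step raises, a retry skips the charge above and resumes here.
    if not store.already_done(email_key):
        send_email(event)
        store.mark_done(email_key)
```

If `send_email` raises on the first attempt, a retry with the same event skips the charge and only repeats the email step, so the customer is charged once. Splitting the payment and the email into separate functions would be another way to keep an email failure from re-running the payment.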

Conclusion

Focusing development efforts on the happy path can be a naive approach to building serverless applications.

Considerations need to be made for when things go wrong.

Because applications are prone to intermittent failures, you do need Lambda retries, so they aren’t necessarily a bad thing. But it’s critical to build idempotent logic where possible, set up the necessary monitoring so Lambda failures and retries don’t go unnoticed, and ensure you’ve raised your Lambda concurrency soft limit accordingly.